Image Sensors World

Hsinchu, Taiwan, March 8th 2022 – Artilux, the leader in CMOS-based SWIR optical sensing technology, demonstrated a multi-spectral optical sensing platform compatible with NIR/SWIR vertical-cavity surface-emitting laser (VCSEL) arrays, light-emitting diodes (LEDs), and CMOS-based GeSi (germanium-silicon) sensors. This compact optical sensing platform is the industry-leading solution targeted at the rapidly growing true wireless stereo (TWS) and wearables markets, in addition to unlocking diverse scenarios in digital health.

In light of the increasingly popular wide-spectrum (NIR/SWIR) optical sensing applications, ranging from vital-sign monitoring in smartwatches to skin detection in TWS earbuds, cost-effective and energy-efficient optical components including LEDs, VCSELs, edge-emitting lasers, and SWIR sensors have become crucial to meeting such rising user demands. The widely discussed skin detection function in TWS earbuds requires SWIR sensors to perform precise in-ear detection and deliver a seamless listening experience while sustaining long battery life. Such products require SWIR operation, lower power consumption, lower cost, and smaller size, along with higher sensitivity. The announced platform aims to deliver a compact and cost-effective multi-spectral optical sensing solution by combining Artilux's CMOS-based, ultra-sensitive SWIR GeSi sensors, which can integrate the AFE (analog front end) and digital functions into a single chip, with high-performance VCSEL arrays at 940nm and 1380nm supplied by Lumentum.

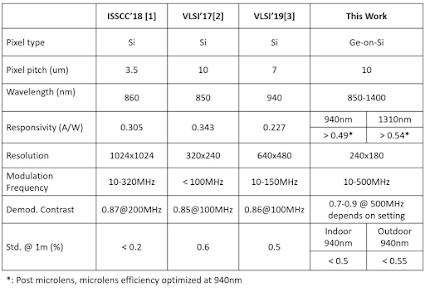

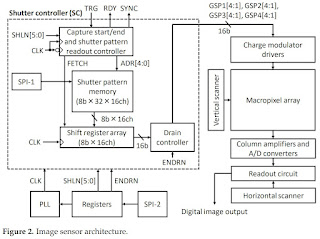

Although the press release does not mention any technical specifications, it may be worth referring to an ISSCC 2020 paper by a team from Artilux that described a Ge-on-Si technology. The paper is titled "An Up-to-1400nm 500MHz Demodulated Time-of-Flight Image Sensor on a Ge-on-Si Platform" (https://doi.org/10.1109/ISSCC19947.2020.9063107).

Press Release: https://www.artiluxtech.com/resources/news/1014