Thesis on Parasitic Light Sensitivity in Global Shutter Pixels

Image Sensors World Go to the original article...

Toulouse University publishes a PhD thesis "Developing a method for modeling, characterizing and mitigating parasitic light sensitivity in global shutter CMOS image sensors" by Federico Pace.

LiDAR with Entangled Photons

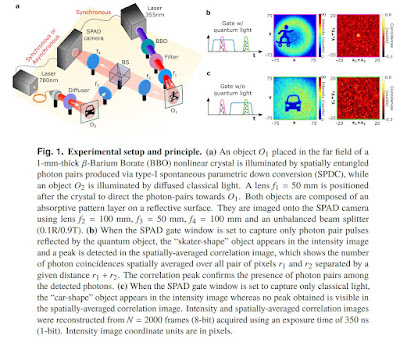

EPFL and Glasgow University publish an Optics Express paper "Light detection and ranging with entangled photons" by Jiuxuan Zhao, Ashley Lyons, Arin Can Ulku, Hugo Defienne, Daniele Faccio, and Edoardo Charbon.

Polarization Event Camera

AIT Austrian Institute of Technology, ETH Zurich, Western Sydney University, and University of Illinois at Urbana-Champaign publish a pre-print paper "Bio-inspired Polarization Event Camera" by Germain Haessig, Damien Joubert, Justin Haque, Yingkai Chen, Moritz Milde, Tobi Delbruck, and Viktor Gruev:

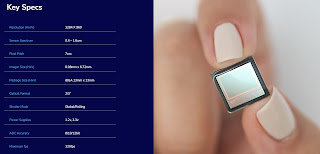

"The stomatopod (mantis shrimp) visual system has recently provided a blueprint for the design of paradigm-shifting polarization and multispectral imaging sensors, enabling solutions to challenging medical and remote sensing problems. However, these bioinspired sensors lack the high dynamic range (HDR) and asynchronous polarization vision capabilities of the stomatopod visual system, limiting temporal resolution to ~12 ms and dynamic range to ~ 72 dB. Here we present a novel stomatopod-inspired polarization camera which mimics the sustained and transient biological visual pathways to save power and sample data beyond the maximum Nyquist frame rate. This bio-inspired sensor simultaneously captures both synchronous intensity frames and asynchronous polarization brightness change information with sub-millisecond latencies over a million-fold range of illumination. Our PDAVIS camera is comprised of 346x260 pixels, organized in 2-by-2 macropixels, which filter the incoming light with four linear polarization filters offset by 45 degrees. Polarization information is reconstructed using both low cost and latency event-based algorithms and more accurate but slower deep neural networks. Our sensor is used to image HDR polarization scenes which vary at high speeds and to observe dynamical properties of single collagen fibers in bovine tendon under rapid cyclical loads."
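The abstract describes 2-by-2 macropixels with four linear polarization filters offset by 45 degrees. As a rough illustration of how polarization information can be recovered from such a macropixel, here is a minimal Python sketch of the textbook linear Stokes calculation. It assumes ideal filters at 0, 45, 90, and 135 degrees and frame-like intensity values; the function names are hypothetical, and this is not the event-based or deep-network reconstruction used in the paper.

```python
import math

def malus_intensity(total, dolp, aolp_deg, filter_deg):
    """Intensity behind an ideal linear polarizer (assumed model).

    total      -- total incident intensity
    dolp       -- degree of linear polarization, 0..1
    aolp_deg   -- angle of linear polarization, degrees
    filter_deg -- polarizer orientation, degrees
    """
    delta = math.radians(aolp_deg - filter_deg)
    return 0.5 * total * (1.0 + dolp * math.cos(2.0 * delta))

def linear_stokes(i0, i45, i90, i135):
    """Linear Stokes parameters (S0, S1, S2) from one 2x2 macropixel."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs. vertical
    s2 = i45 - i135                     # +45 vs. -45 degrees
    return s0, s1, s2

def dolp_aolp(i0, i45, i90, i135):
    """Degree (0..1) and angle (radians) of linear polarization."""
    s0, s1, s2 = linear_stokes(i0, i45, i90, i135)
    if s0 <= 0.0:
        return 0.0, 0.0  # no light: polarization is undefined, report zeros
    dolp = math.hypot(s1, s2) / s0
    aolp = 0.5 * math.atan2(s2, s1)
    return dolp, aolp

# Simulate one macropixel viewing 60% polarized light at 30 degrees,
# then recover the polarization state from the four samples.
samples = [malus_intensity(100.0, 0.6, 30.0, a) for a in (0, 45, 90, 135)]
dolp, aolp = dolp_aolp(*samples)
print(f"DoLP = {dolp:.3f}, AoLP = {math.degrees(aolp):.1f} deg")
```

In a real sensor the filters are not ideal and the four samples come from neighboring pixels, so a calibration step and spatial interpolation would normally precede this calculation.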

SWIR Startup TriEye Collaborates with Automotive Tier 1 Supplier Hitachi Astemo

PRNewswire: TriEye announces a collaboration with Hitachi Astemo, a Tier 1 automotive supplier of world-class products. TriEye's SEDAR (Spectrum Enhanced Detection And Ranging) has also received significant recognition: it was named a CES 2022 Innovation Award Honoree in the Vehicle Intelligence category.

SeeDevice Focuses on SWIR Sensing and Joins John Deere’s 2022 Startup Collaborator Program

GlobeNewswire: Deere & Company announces the companies that will be part of the 2022 cohort of its Startup Collaborator program, including SeeDevice. The program was launched in 2019 to enhance and deepen Deere's interaction with startup companies whose technology could add value for John Deere customers.

Omnivision Unveils its New Logo

Omnivision publishes short videos explaining its new logo:

UV Sensors in SOI Process

Tower publishes an MDPI paper "Embedded UV Sensors in CMOS SOI Technology" by Michael Yampolsky, Evgeny Pikhay, and Yakov Roizin.

Nanostructure Modifiers for Pixel Spectral Response

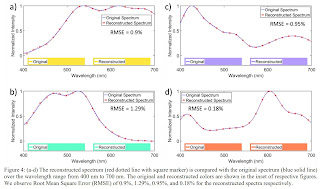

University of California – Davis and W&WSens publish an Arxiv.org paper "Reconstruction-based spectroscopy using CMOS image sensors with random photon-trapping nanostructure per sensor" by Ahasan Ahamed, Cesar Bartolo-Perez, Ahmed Sulaiman Mayet, Soroush Ghandiparsi, Lisa McPhillips, Shih-Yuan Wang, and M. Saif Islam.

Image Sensor Facts for Kids

Kiddle, an encyclopedia for kids, publishes a page about image sensors:

Recent Videos: EnliTech, IPVM, Scantinel, Infiray, Omron, Ibeo

EnliTech presents its CIS wafer testing solutions:

Bankrupt HiDM is Acquired by Rongxin Semiconductor

JW Insights reports that Rongxin Semiconductor acquired the bankrupt HiDM (Huaian Imaging Device Manufacturing Corporation) in Huaian, Jiangsu province, at auction. Rongxin Semiconductor was founded in April 2021 in Ningbo, Zhejiang province. Rongxin paid RMB1.666 billion ($262.1 million) for HiDM assets.

EI 2022 Course on Signal Processing for Photon-Limited Imaging

Stanley Chan from Purdue University publishes slides for his 2022 Electronic Imaging short course "Signal Processing for Photon-Limited Imaging." A few of the 81 slides:

Actlight DPD Presentation

Actlight CEO Serguei Okhonin presented "Dynamic Photodiodes: Unique Light-Sensing Technology with Tunable Sensitivity" at the Photonics Spectra Conference, held on-line last week. Conference registration is free of charge. A few slides from the presentation:

"Electrostatic Doping" for In-Sensor Computing

Harvard University, KIST, Pusan University, and Samsung Advanced Institute of Technology publish a pre-print paper "In-sensor optoelectronic computing using electrostatically doped silicon" by Houk Jang, Henry Hinton, Woo-Bin Jung, Min-Hyun Lee, Changhyun Kim, Min Park, Seoung-Ki Lee, Seongjun Park, and Donhee Ham.

Quanta Image Sensor Presentation

Eric Fossum presented a keynote at the Photonics Spectra Conference last week (free registration): "Quanta Image Sensors: Every Photon Counts, Even in a Smartphone." A few slides:

TrendForce: 4-camera Modules in Smartphones Become Less Popular

TrendForce analysis shows that four-camera modules in smartphones are becoming less popular:

"The trend towards multiple cameras started to shift in 2H21 after a few years of positive growth. The previous spike in the penetration rate of four camera modules was primarily incited by mid-range smart phone models in 2H20 when mobile phone brands sought to market their products through promoting more and more cameras. However, as consumers realized that the macro and depth camera usually featured on the third and fourth cameras were used less frequently and improvements in overall photo quality limited, the demand for four camera modules gradually subsided and mobile phone brands returned to fulfilling the actual needs of consumers.

Overall, TrendForce believes that the number of camera modules mounted on smartphones will no longer be the main focus of mobile phone brands, as focus will return to the real needs of consumers. Therefore, triple camera modules will remain the mainstream design for the next 2~3 years.

Although camera shipment growth has slowed, camera resolution continues to improve. Taking primary cameras as an example, the current mainstream design is 13-48 million pixels, accounting for more than 50% of cameras in 2021. In second place are products featuring 49-64 million pixels which accounted for more than 20% of cameras last year with penetration rate expected to increase to 23% in 2022. The third highest portion is 12 million pixel products, currently dominated by the iPhone and Samsung's flagship series."

Samsung Hyper-Spectral Sensor for Mobile Applications

De Gruyter Nanophotonics publishes a paper "Compact meta-spectral image sensor for mobile applications" by Jaesoong Lee, Yeonsang Park, Hyochul Kim, Young-Zoon Yoon, Woong Ko, Kideock Bae, Jeong-Yub Lee, Hyuck Choo, and Young-Geun Roh from Samsung Advanced Institute of Technology and Chungnam National University.

One More Terabee iToF Webinar

Terabee publishes "An introduction to Time-of-Flight sensing" webinar:

Emberion Raises €6M

Emberion, a developer of SWIR image sensors using nanomaterials, has raised €6M in funding from Nidoco AB, Tesi (Finnish Industry Investment Ltd) and Verso Capital.

“We are disrupting multiple imaging markets by extending the wavelength range at a significantly more affordable cost. Our revolutionary sensor is designed to meet the needs of even the most challenging machine vision applications, such as plastic sorting. We look forward to helping customers access new information at infrared wavelengths, thereby critically enhancing their applications beyond today’s capabilities,” said Jyrki Rosenberg, CEO, Emberion.

“We have created a new generation of image sensors using nanomaterials. Our high-performance industrial cameras can increase efficiency and reduce the loss of resources in many industrial processes. We innovate at all levels of camera design: nanomaterials, integrated circuit design, electronics, photonics and software. We are now stepping forward to expand our capacity to manufacture,” commented Tapani Ryhänen, CTO.

“We are appreciative of the high interest and trust towards our technology from investors and customers. With this funding, our next step is to increase our production capacity to be able to serve our customers’ needs. We will also intensify our efforts to further develop mid-wave infrared (MWIR) and broadband solutions to expand our offerings and to enhance the capabilities of our current VIS-SWIR product line,” added Rosenberg.

IDTechEx on SWIR Sensor Technologies

The Photonics Spectra conference, held on-line this week (with free registration), features a presentation by IDTechEx analyst Matthew Dyson, "Emerging Short-Wavelength Infrared Sensors." A few slides from the presentation:

SWIR Multi-Spectral Sensor

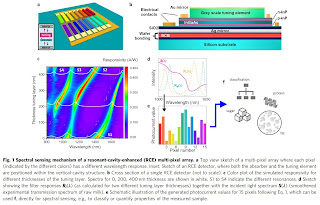

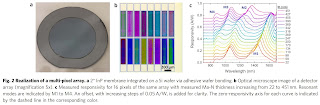

Phys.org: Nature publishes Eindhoven University of Technology's paper "Integrated near-infrared spectral sensing" by Kaylee D. Hakkel, Maurangelo Petruzzella, Fang Ou, Anne van Klinken, Francesco Pagliano, Tianran Liu, Rene P. J. van Veldhoven & Andrea Fiore.

Infiray Presents its Visible and Thermal Camera Fusion for Automotive Applications

Infiray presents its "new breakthrough on Automotive Night Vision":

Pixart Introduces Low-Power Intelligent Object Detecting Sensor

It appears that integrating a vision processor with an image sensor is becoming a trend. First Himax and Sony did it, and now Pixart.

SiPM in 55nm Globalfoundries BCD Process

EPFL, Globalfoundries, and KIST publish an Arxiv.org paper "On Analog Silicon Photomultipliers in Standard 55-nm BCD Technology for LiDAR Applications" by Jiuxuan Zhao, Tommaso Milanese, Francesco Gramuglia, Pouyan Keshavarzian, Shyue Seng Tan, Michelle Tng, Louis Lim, Vinit Dhulla, Elgin Quek, Myung-Jae Lee, and Edoardo Charbon.

Sony Drone Features 10 Image Sensors

Sony publishes an extended "Airpeak S1 developer interview: 2nd Sensing Edition." A few quotes:

ST Presents 4.6um Pixel iToF Sensor

ST presents its 4.6um iToF sensor at ESSCIRC 2021 that appears to be quite close to production, judging by the large team size: "4.6μm Low Power Indirect Time-of-Flight Pixel Achieving 88.5% Demodulation Contrast at 200MHz for 0.54MPix Depth Camera" by Cédric Tubert, Pascal Mellot, Yann Desprez, Celine Mas, Arnaud Authié, Laurent Simony, Grégory Bochet, Stephane Drouard, Jeremie Teyssier, Damien Miclo, Jean-Raphael Bezal, Thibault Augey, Franck Hingant, Thomas Bouchet, Blandine Roig, Aurélien Mazard, Raoul Vergara, Gabriel Mugny, Arnaud Tournier, Frédéric Lalanne, François Roy, Boris Rodrigues Goncalves, Matteo Vignetti, Pascal Fonteneau, Vincent Farys, François Agut, Joao Migue Melo Santos, David Hadden, Kevin Channon, Christopher Townsend, Bruce Rae, and Sara Pellegrini.
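For readers unfamiliar with the numbers in the title, the sketch below shows the generic textbook relations behind indirect time-of-flight ranging: the unambiguous range set by the modulation frequency, the phase-to-distance conversion, and a four-phase estimate of phase and contrast. It is a simplified sinusoidal model with an assumed sign convention and hypothetical function names, not the method or contrast definition used in the ST paper.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

def unambiguous_range(f_mod_hz):
    """Largest distance measurable before the phase wraps around."""
    return C / (2.0 * f_mod_hz)

def phase_to_distance(phase_rad, f_mod_hz):
    """Convert a measured phase shift (radians) into distance (meters)."""
    phase = phase_rad % (2.0 * math.pi)
    return C * phase / (4.0 * math.pi * f_mod_hz)

def four_phase_estimate(a0, a90, a180, a270):
    """Phase and contrast from four correlation samples.

    Assumed model: a_k = offset + amplitude * cos(phase - theta_k),
    with theta_k = 0, 90, 180, 270 degrees.
    """
    i = a0 - a180   # in-phase component, 2 * amplitude * cos(phase)
    q = a90 - a270  # quadrature component, 2 * amplitude * sin(phase)
    phase = math.atan2(q, i) % (2.0 * math.pi)
    amplitude = 0.5 * math.hypot(i, q)
    offset = 0.25 * (a0 + a90 + a180 + a270)
    contrast = amplitude / offset if offset > 0.0 else 0.0
    return phase, contrast

# At 200 MHz modulation the phase wraps roughly every 0.75 m.
print(f"unambiguous range: {unambiguous_range(200e6):.3f} m")
```

The short unambiguous range at 200 MHz is why iToF cameras commonly combine several modulation frequencies; the higher frequency improves depth precision while a lower one resolves the wrap-around.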

PMD-Infineon Advances in FSI iToF Sensors

The ESSCIRC 2021 paper and presentation "Advancements in Indirect Time of Flight Image Sensors in Front Side Illuminated CMOS" by M. Dielacher, M. Flatscher, R. Gabl, R. Gaggl, D. Offenberg, and J. Prima are available on-line. A few interesting slides:

ORRAM Neuromorphic Vision Sensor

Hong Kong Polytechnic University publishes a video explaining its Optoelectronic Resistive Memory-based vision sensor with image recognition capabilities:

LiDAR News: Voyant, Innoviz, Quanergy

PRNewswire: Lidar-on-a-chip startup Voyant Photonics raises $15.4M in Series A. Voyant's LiDAR system, containing thousands of optical components fabricated on a single semiconductor chip, enables its customers to integrate an effective and exponentially more scalable LiDAR system than possible to date.

Ge/MoS2 Broadband Detectors

Science publishes a paper "Visible and infrared dual-band imaging via Ge/MoS2 van der Waals heterostructure" by Aujin Hwang, Minseong Park, Youngseo Park, Yeongseok Shim, Sukhyeong Youn, Chan-Ho Lee, Han Beom Jeong, Hu Young Jeong, Jiwon Chang, Kyusang Lee, Geonwook Yoo, Junseok Heo from Ajou University, Yonsei University, Soongsil University, KAIST, UNIST (Korea), and University of Virginia (USA).